from: Shapley value - Wikipedia

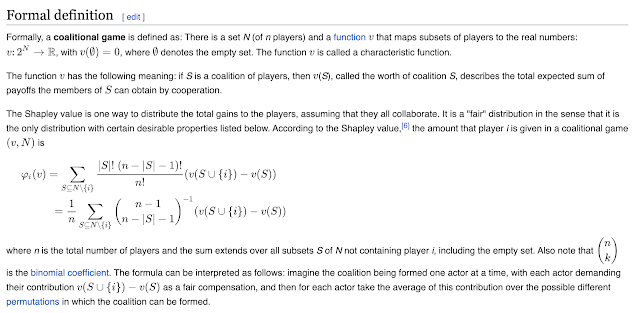

Shapley Value $\varphi_i(v)$: This is the formula that calculates the contribution of player $i$ to the coalition. It ensures that each player's contribution to the total gain is acknowledged fairly. The value for player $i$ in the coalition is calculated by considering all subsets $S$ of players that do not include player $i$ and then taking into account how much player $i$ adds to each subset:

$$\varphi_i(v) = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(n - |S| - 1)!}{n!} \left( v(S \cup \{i\}) - v(S) \right)$$

Terms in the Shapley Value Formula:

- $|S|$: The number of players in subset $S$.

- $(n - |S| - 1)!$: Factorial of the number of players not in $S$ minus one.

- $|S|!$: Factorial of the number of players in $S$.

- $n!$: Factorial of the total number of players.

- $v(S \cup \{i\}) - v(S)$: The marginal contribution of player $i$ to subset $S$, which is the difference in the value of the coalition with and without player $i$.

- The summation extends over all subsets $S$ of $N$ that do not contain player $i$, indicating that the Shapley value considers all possible ways a player can contribute to any coalition.
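To make the terms above concrete, here is a minimal Python sketch that evaluates the subset formula directly. The function name `shapley_values` and the three-player "glove game" (players 1 and 2 each hold a left glove, player 3 holds a right glove, and a coalition is worth 1 if it can form a pair) are illustrative choices, not part of the original post.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, v):
    """Exact Shapley values from the subset formula.

    players: list of hashable player labels.
    v: function mapping a frozenset of players to the coalition's value.
    """
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(n):  # |S| runs from 0 to n - 1
            # Weight |S|! (n - |S| - 1)! / n! is the same for all S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                S = frozenset(subset)
                # Marginal contribution of player i to subset S.
                total += weight * (v(S | {i}) - v(S))
        phi[i] = total
    return phi


def glove_game(S):
    """Illustrative game: worth 1 if S holds a left glove (1 or 2) and the right glove (3)."""
    has_left = 1 in S or 2 in S
    has_right = 3 in S
    return 1.0 if has_left and has_right else 0.0


phi = shapley_values([1, 2, 3], glove_game)
print(phi)  # players 1 and 2 each get 1/6, player 3 gets 2/3
```

The three values sum to 1, the value of the full coalition, which is the "fair split of the total gain" described above. The loop visits every subset, so the cost grows exponentially with the number of players.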

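An alternative equivalent formula for the Shapley value is:

$$\varphi_i(v) = \frac{1}{n!} \sum_{R} \left[ v(P_i^R \cup \{i\}) - v(P_i^R) \right]$$

where the sum ranges over all $n!$ orders $R$ of the players and $P_i^R$ is the set of players in $N$ which precede $i$ in the order $R$. Finally, it can also be expressed as

$$\varphi_i(v) = \frac{1}{n} \sum_{S \subseteq N \setminus \{i\}} \binom{n-1}{|S|}^{-1} \left( v(S \cup \{i\}) - v(S) \right)$$

which can be interpreted as

$$\varphi_i(v) = \frac{1}{\text{number of players}} \sum_{\text{coalitions excluding } i} \frac{\text{marginal contribution of } i \text{ to coalition}}{\text{number of coalitions excluding } i \text{ of this size}}$$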
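The order-based form, which averages a player's marginal contribution over every ordering of the players, can be sketched in Python as follows. The function name `shapley_by_orderings` and the glove game used to exercise it (players 1 and 2 hold left gloves, player 3 holds the right glove) are assumptions made for illustration.

```python
from itertools import permutations
from math import factorial


def shapley_by_orderings(players, v):
    """Exact Shapley values by averaging marginal contributions over all n! orders."""
    n = len(players)
    totals = {p: 0.0 for p in players}
    for order in permutations(players):
        preceding = frozenset()  # players that come before i in this order
        for i in order:
            totals[i] += v(preceding | {i}) - v(preceding)
            preceding = preceding | {i}
    return {p: total / factorial(n) for p, total in totals.items()}


def glove_game(S):
    """Illustrative game: worth 1 if S holds a left glove (1 or 2) and the right glove (3)."""
    return 1.0 if (1 in S or 2 in S) and 3 in S else 0.0


phi_orders = shapley_by_orderings([1, 2, 3], glove_game)
print(phi_orders)  # players 1 and 2 each get 1/6, player 3 gets 2/3
```

Player 3 contributes 1 in every order where it is not first, which is 4 of the 6 orders, giving 2/3. This matches what the subset formula yields for the same game.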

SHapley Additive exPlanations (SHAP):

- Origin: SHAP is a modern tool used in the field of machine learning, particularly in explainable AI, and was developed by Lundberg and Lee in 2017.

- Purpose: SHAP is used to explain the output of machine learning models by attributing the prediction of each instance to the contributions of individual features.

- Calculation: SHAP values are computed for each feature for each prediction, reflecting how much each feature contributed to the prediction relative to a baseline. It uses Shapley values to provide these explanations.

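- Properties: SHAP inherits the properties of Shapley values (efficiency, symmetry, the null-player property, and additivity) but applies them in the context of a predictive model. In particular, efficiency ensures that the feature attributions for a prediction sum to the difference between the model output and the baseline.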

- Practicality: Computing exact Shapley values for machine learning models can be extremely computationally expensive, especially for complex models with many features. SHAP introduces efficient algorithms and approximations to estimate Shapley values in a practical time frame.
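To illustrate why approximation helps, here is a sketch of one generic estimator: sampling random player orderings instead of enumerating all of them. This is not claimed to be the algorithm the SHAP library uses internally. The function name `shapley_monte_carlo`, the sample count, and the toy game are assumptions for illustration.

```python
import random


def shapley_monte_carlo(players, v, num_samples=2000, seed=0):
    """Estimate Shapley values by averaging marginal contributions over random orders."""
    rng = random.Random(seed)
    totals = {p: 0.0 for p in players}
    order = list(players)
    for _ in range(num_samples):
        rng.shuffle(order)
        coalition = frozenset()
        previous_value = v(coalition)
        for i in order:
            coalition = coalition | {i}
            current_value = v(coalition)
            totals[i] += current_value - previous_value  # marginal contribution of i
            previous_value = current_value
    return {p: total / num_samples for p, total in totals.items()}


def squared_size_game(S):
    """Illustrative symmetric game: a coalition of k players is worth k squared."""
    return float(len(S) ** 2)


estimates = shapley_monte_carlo(list(range(5)), squared_size_game)
print(estimates)  # each estimate should be close to the exact value of 5
```

Each sampled order costs only n evaluations of the value function, so the total cost is `num_samples * n` rather than exponential in n. Because the marginal contributions within a single order telescope, the estimates always sum to the value of the full coalition, even though individual estimates are noisy.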

In essence, SHAP adapts the concept of Shapley values to the domain of machine learning. While Shapley values were originally intended for human players in a coalition game, SHAP applies the same principles to the "players" of a machine learning model, which are the features used for making predictions. SHAP is particularly focused on providing local explanations, meaning it explains individual predictions with an additive feature attribution method that respects the Shapley value properties.

This makes SHAP a powerful tool for interpreting complex models, as it breaks down predictions into understandable contributions from each feature, allowing users to understand the decision-making process of the model, thereby increasing transparency and trust.

The SHAP base value, also known as the expected value, is a key component in the calculation of SHAP (SHapley Additive exPlanations) values. It represents the average prediction of the model over the training dataset (more generally, over whichever background dataset is supplied to the explainer).

In other words, if you had to make a prediction without knowing any features, the best guess would be the base value: the prediction the model would make with no feature information available.

For example, in a binary classification problem, the base value could be the log-odds of the average of the outcome variable in the training set. In a regression problem, the base value could be the average of the target variable across all the records.

The base value depends on the link function used. With the identity link function, the base value is in the raw score space (for a logistic model, that is log-odds). To switch to the probability space, apply the appropriate transformation, such as the sigmoid function for logistic regression.
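A small sketch of that conversion, assuming a binary classifier whose raw score is log-odds. The positive rate of 0.2 and the two SHAP values are made-up numbers for illustration only.

```python
from math import exp, log


def logit(p):
    """Probability -> log-odds."""
    return log(p / (1.0 - p))


def sigmoid(z):
    """Log-odds -> probability."""
    return 1.0 / (1.0 + exp(-z))


positive_rate = 0.2  # hypothetical share of positive labels in the training set
base_value_log_odds = logit(positive_rate)  # log(0.25), about -1.386
base_value_probability = sigmoid(base_value_log_odds)  # recovers 0.2

# Hypothetical SHAP values for one prediction, expressed in log-odds space.
shap_values_log_odds = [0.8, -0.3]
predicted_log_odds = base_value_log_odds + sum(shap_values_log_odds)
predicted_probability = sigmoid(predicted_log_odds)

print(base_value_log_odds, base_value_probability, predicted_probability)
```

The contributions add up in the raw score space. The sigmoid is applied once to the total, so the individual values cannot be read as additive changes in probability.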

In the context of SHAP values, the base value serves as the starting point for the allocation of feature contributions. Each feature’s SHAP value then represents how much that feature changes the prediction from the base value.
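The relationship "base value plus SHAP values equals prediction" can be checked by hand on a tiny example. The sketch below uses a made-up model, a made-up four-row background set, and one common choice of value function: features in the coalition take the instance's values, and the remaining features are filled in from each background row and averaged. All names and numbers here are assumptions for illustration, and the SHAP library is not used.

```python
from itertools import combinations
from math import factorial


def model(x):
    """Hypothetical model with one additive term and one interaction term."""
    return 2.0 * x[0] + x[1] * x[2]


# Hypothetical background data and the instance to explain.
background = [(0.0, 1.0, 2.0), (1.0, 0.0, 1.0), (2.0, 2.0, 0.0), (1.0, 1.0, 1.0)]
instance = (3.0, 2.0, 1.0)
n = len(instance)


def value(S):
    """Average model output with features in S fixed to the instance's values."""
    total = 0.0
    for row in background:
        mixed = tuple(instance[j] if j in S else row[j] for j in range(n))
        total += model(mixed)
    return total / len(background)


# With no features known, the value is the average prediction over the background.
base_value = value(frozenset())

shap_values = []
for i in range(n):
    others = [j for j in range(n) if j != i]
    phi = 0.0
    for size in range(n):
        weight = factorial(size) * factorial(n - size - 1) / factorial(n)
        for subset in combinations(others, size):
            S = frozenset(subset)
            phi += weight * (value(S | {i}) - value(S))
    shap_values.append(phi)

prediction = model(instance)
print(base_value, shap_values, prediction)
# base_value is 2.75 and prediction is 8.0, so the SHAP values sum to 5.25
```

Feature 0 enters the model additively, so its attribution is 2 × (3 − 1) = 4, where 1 is the background mean of that feature. The remaining 1.25 is shared between the two interacting features.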

![{\displaystyle \varphi _{i}(v)={\frac {1}{n!}}\sum _{R}\left[v(P_{i}^{R}\cup \left\{i\right\})-v(P_{i}^{R})\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/02ee9b216534044b6aa3677fde588be330956412)
